Machine Learning Self-Driving Car Simulation

Navigating a simulated world with computer vision, capturing license plate images, and processing them with a trained neural network

Context:

A brand new course added to Engineering Physics this year, ENPH 353 is a project-based competition course in which teams of two were challenged to program a robot capable of navigating a simulated world, avoiding obstacles, and collecting license plate information from various parked cars. Correctly labelling the location and license plate number of a parked car earned points, whereas any off-road travel or collision with world elements deducted points.

Project report if curious: UBC Engineering - Autonomous simulated car

Key Skills:

- Machine learning

- Computer vision

- Image recognition and processing

- Reinforcement learning

Key Technology:

- Linux

- Gazebo

- ROS

- OpenCV

- TensorFlow

- Keras

- Git

- Python

The world:

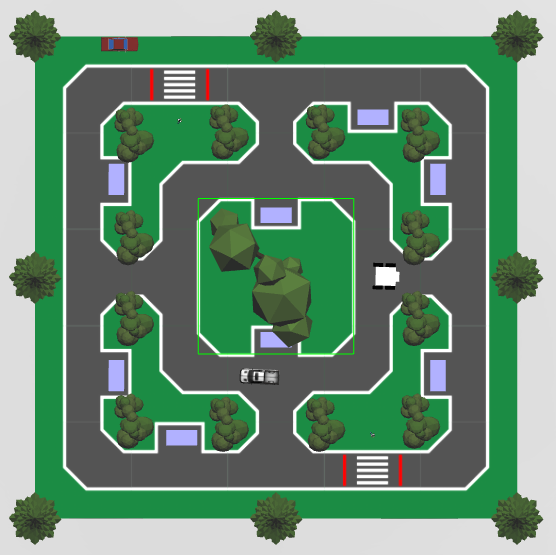

The following image captures a top view of the Gazebo simulation world that our robot operated in. Our robot (in white) is shown at its starting position on the road, and the "parked cars" are represented by the blue rectangles placed throughout the course at various "parking locations". Our objective was to navigate the roads and collect the license plate information of all parked cars along our path.

Sensory Input:

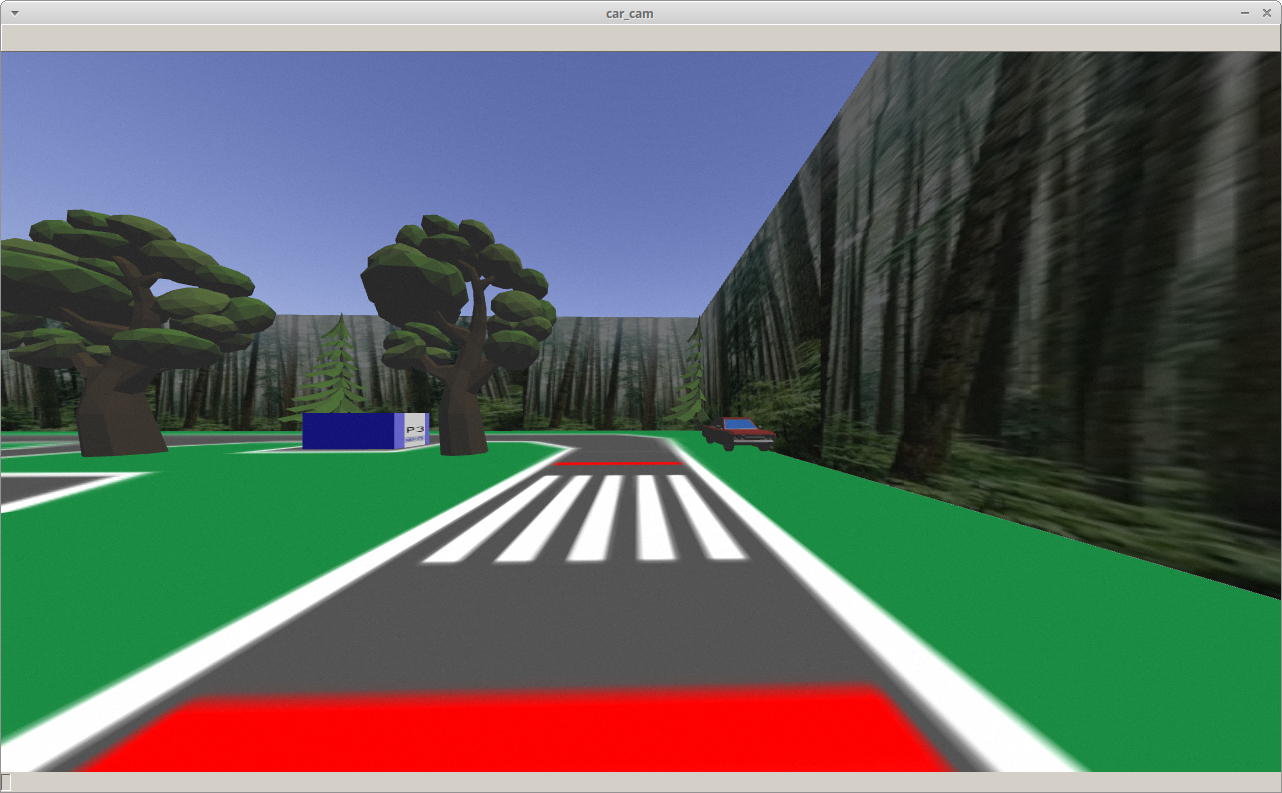

The operation of our robot relied solely on the camera feed it received from the virtual world. Within the Gazebo simulation, a forward-facing camera mounted on top of the robot continuously fed our system with images. The following image shows an example of a frame we would receive.

ROS Architecture:

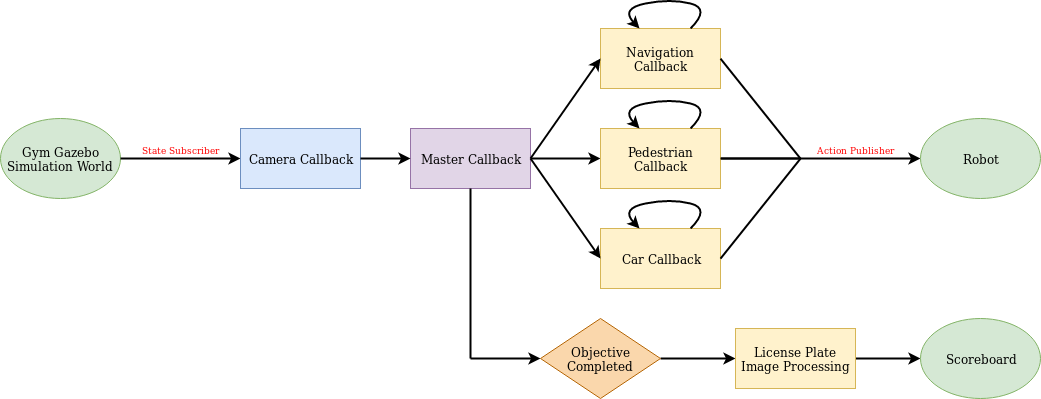

Since the only input to our system was the camera feed, our system needed to be capable of transforming an input image into output commands through a series of callbacks and algorithms. At a very high level, this architecture is summarized in the following diagram.

Each "callback" is essentially an asynchronously running method responsible for handling a specific aspect of our robot operation. For example, our "Car Callback" handles capturing images of the parked cars, as well as avoiding the roaming truck which circles the middle track. Our "Navigation Callback" uses the camera feed to stay centered within the road and to properly stop at crosswalks. After we have successfully navigated the course, our system has captured multiple images of each parked car we have passed.

After capturing images of all parked cars, an "Objective Completed" flag within our system is set to True. This starts the execution of our License Plate Image Processing Callback.
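The hand-off from image capture to plate processing can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not our actual node: the names `RobotState`, `car_callback`, `plate_processing_callback` and `read_plate` are hypothetical, and plain functions stand in for ROS subscriber callbacks so the sketch runs without ROS installed.

```python
from dataclasses import dataclass, field


@dataclass
class RobotState:
    """Shared state touched by the callbacks (all names are hypothetical)."""
    expected_cars: int
    plate_images: dict = field(default_factory=dict)  # car id -> list of frames
    objective_completed: bool = False
    results: list = field(default_factory=list)


def car_callback(state, car_id, frame):
    """Store a frame of a parked car; set the flag once every car has been seen."""
    state.plate_images.setdefault(car_id, []).append(frame)
    if len(state.plate_images) >= state.expected_cars:
        state.objective_completed = True


def plate_processing_callback(state, read_plate):
    """Process the stored frames, but only after the objective flag is set."""
    if not state.objective_completed:
        return False
    for car_id, frames in sorted(state.plate_images.items()):
        state.results.append((car_id, read_plate(frames)))
    return True


# Stand-in data: strings instead of images, len() instead of a real plate reader.
state = RobotState(expected_cars=2)
car_callback(state, "P1", "frame_a")
ran_early = plate_processing_callback(state, read_plate=len)  # flag not set yet
car_callback(state, "P1", "frame_b")
car_callback(state, "P2", "frame_c")
ran_late = plate_processing_callback(state, read_plate=len)   # flag is set now
```

Gating the processing step on a single flag keeps the capture and processing stages independent: the processing function does nothing until every expected car has at least one stored frame.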

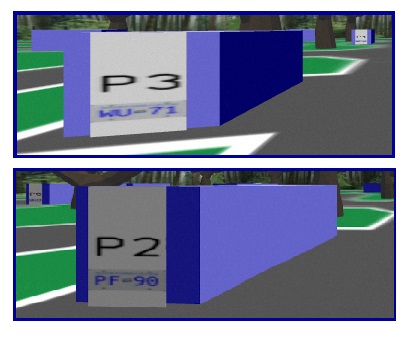

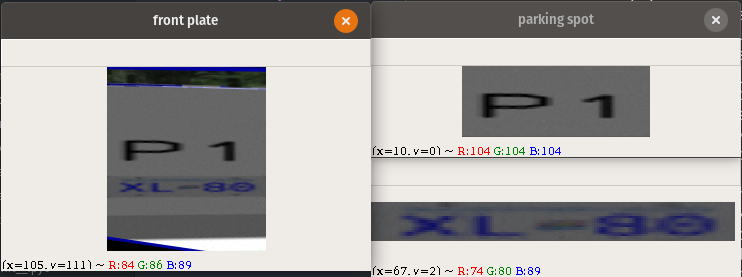

License Plate OCR:

The captured images are first filtered and cropped to isolate the portion of the image that holds the parking location and plate number. The images above show an example of the unfiltered captured images, followed by the process of locating the areas that hold the information we are looking for. It is worth noting that some of the images we capture are not completely in focus. Regardless, our algorithm was able to correctly identify and crop the images for further processing. After filtering the images, we send them through our pre-trained neural network, which outputs the parking location and license plate number to the terminal and scoreboard with an accuracy of 98%.
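As a rough illustration of the last step, the sketch below turns per-character class probabilities into a plate string. It assumes a classifier that emits one probability vector per cropped character over 36 classes (A-Z, then 0-9); that output layout, along with the names `CLASSES`, `decode_character`, `decode_plate` and `one_hot`, is an assumption made for this example rather than a description of our network.

```python
import string

# Assumed class order: 26 uppercase letters followed by 10 digits.
CLASSES = string.ascii_uppercase + string.digits


def decode_character(probabilities):
    """Return (character, confidence) for the most likely class."""
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return CLASSES[best], probabilities[best]


def decode_plate(per_char_probs):
    """Join the per-character predictions; report the weakest confidence."""
    chars, confidences = zip(*(decode_character(p) for p in per_char_probs))
    return "".join(chars), min(confidences)


def one_hot(char, p=0.9):
    """Fake network output for testing: probability p on `char`, rest spread evenly."""
    rest = (1.0 - p) / (len(CLASSES) - 1)
    return [p if c == char else rest for c in CLASSES]


# Stand-in for the network output of a four-character plate.
plate, confidence = decode_plate([one_hot(c) for c in "AB12"])
```

Reporting the lowest per-character confidence is one simple way to decide which of several captures of the same car to trust.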

The Big Picture:

The following video shows a captured demo run of our finished robot. A top-down view on the right portion of the screen shows the robot's progress along its path, whereas the left portion of the screen shows the world through the eyes of the robot. There are a few other key points worth noting below.

Within the Gazebo simulation world, we had to deal with the constraint that output commands were discrete, meaning there was only a finite set of commands that we could give. In our case, this meant that we could not publish "go forward" and "turn left" commands at the same time. As a result, our robot looks very jittery as it travels. It's worth noting that this is simply a limitation of the platform the simulation was running on.
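One way to picture the one-command-at-a-time constraint is a controller that looks at a single row of a binary road mask and returns exactly one command per frame. This is a simplified sketch, not our navigation code: the command names, the `deadband` parameter, and the idea of steering from a single mask row are all assumptions made for illustration.

```python
# Hypothetical finite command set; only one can be issued per frame.
COMMANDS = ("forward", "left", "right", "stop")


def road_center(row):
    """Mean column index of road pixels (nonzero values) in one row, or None."""
    columns = [i for i, value in enumerate(row) if value]
    if not columns:
        return None
    return sum(columns) / len(columns)


def choose_command(row, deadband=2.0):
    """Pick a single discrete command from the road's offset to the image center."""
    center = road_center(row)
    if center is None:
        return "stop"  # assumption: no road visible, so do not move
    error = center - (len(row) - 1) / 2
    if error < -deadband:
        return "left"
    if error > deadband:
        return "right"
    return "forward"


centered = choose_command([0, 0, 0, 1, 1, 1, 1, 0, 0, 0])
road_on_left = choose_command([1, 1, 1, 0, 0, 0, 0, 0, 0, 0])
road_on_right = choose_command([0, 0, 0, 0, 0, 0, 0, 1, 1, 1])
no_road = choose_command([0] * 10)
```

Because each frame yields either a turn or a forward step but never both, a controller like this alternates between the two on a curve, which is consistent with the jittery motion seen in the video.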

Bounding boxes can be seen throughout the video. Parked cars are marked with blue bounding boxes, pedestrians with red or green bounding boxes depending on their position, and the roaming truck with a yellow bounding box. Identifying these objects of interest is what drove the decisions made within our code.
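For readers unfamiliar with the idea, a bounding box can be derived from a binary mask of the pixels belonging to an object. The sketch below is a plain-Python illustration under that assumption; `bounding_box` and `BOX_COLOURS` are hypothetical names, and the rule that selects red or green for pedestrians is left out because it is not described here.

```python
def bounding_box(mask):
    """Return (x_min, y_min, x_max, y_max) of the nonzero pixels, or None if empty."""
    xs, ys = [], []
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return min(xs), min(ys), max(xs), max(ys)


# Box colours for the two object types whose colour is fixed in the video.
BOX_COLOURS = {"parked_car": "blue", "truck": "yellow"}

# Tiny stand-in mask: 1 marks pixels that matched the object's colour filter.
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
box = bounding_box(mask)
empty = bounding_box([[0, 0], [0, 0]])
```

The size and position of such a box give a cheap proxy for how close an object is and where it sits in the frame.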

At the very end of the video, all captured license plates are printed to the terminal in the top left corner of the screen.

Achievements:

- Latest night: 3:13 am

- Biggest dream that was given up on: Front-to-back reinforcement learning

- Largest cup of coffee: Approximately 1 L

- Fastest sim runtime: Realtime Factor of 11